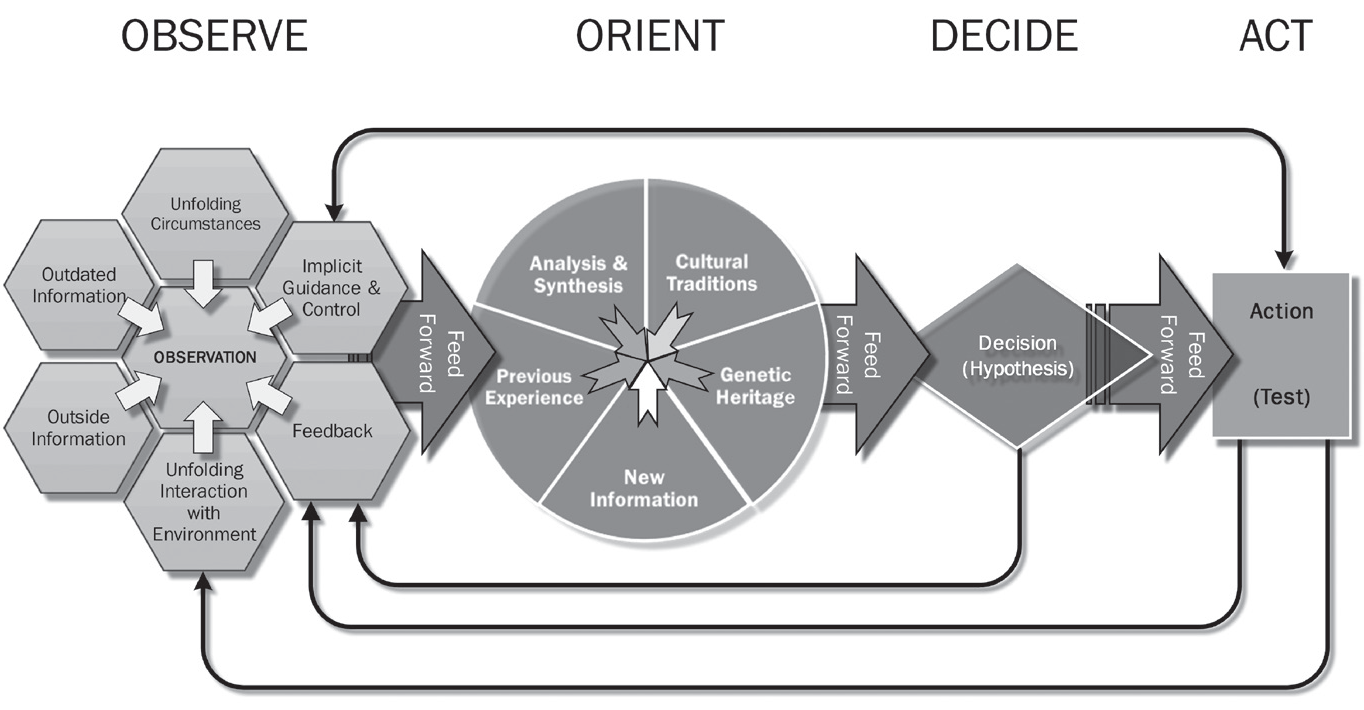

Machine learning operationalization has come to be seen as the industrial holy grail of practical machine learning. And that isn’t a bad thing: for data to eventually be useful to organizations, we need a way to bring models to business users and decision makers. I’ve come to understand the value of machine learning for decision making through a specific lens, that of tactical decision making as it fits into the common OODA loop. At first sight, these may seem like ideas meant only for a business and enterprise context, but the exploration below will show why this could be an important pattern to observe in ML-enabled decision making.

Lazy Leaders of a Specific Kind

I recently listened to a Simon Sinek talk in which he made a rather bold statement (among the many others he’s made): “Rules are for lazy leaders”. I read this phrase through the specific lens of machine learning solutions built to support decision making, work that is almost existential to the machine learning practitioner’s workflow today. What I mean is that leaders who think in terms of systems rarely rely on a single rule; they usually think about how a system evolves and changes, and about what they need in order to execute their OODA (“Observe-Orient-Decide-Act”) loop. I want to suggest that machine learning can create a generation of lazy leaders, and I mean “lazy” here in a very specific way.

Observe-Orient-Decide-Act

A less well-known sister of the popular Deming PDCA (Plan-Do-Check-Act) cycle, OODA was first developed for the battlefield, where there is (a) a fog of war, characterized by high information asymmetry; (b) a constantly changing and evolving decision making environment; (c) strong penalties for bad decisions; and (d) risk minimization as a default expectation.

These criteria, among others, make battlefield decision making extremely tactical. Strategy is for generals and politicians, who have the luxury of a window into the battlefield (from which they collect data) and the experience to make sense of it all in the context of the broader war, beyond any one front or battle. Organizations work much the same way for their decision makers.

As of 2021, machine learning systems have mostly influenced decision making in tactical roles, where decisions are made routinely within a decision window of days, hours or minutes. Andrew Ng famously summarized this in his “AI is the new electricity” talk, in which he discusses the (unreasonable) effectiveness of machine learning for tasks that require complex decisions in a short time frame.

Sometimes strategic and tactical decisions are made on the same time scales, but this is rarely the case outside of smaller organizations. In any organization operating at scale, this split between decision making time scales develops naturally. OODA is well suited to decision makers in this limited, tactical setting. Such decision makers are rarely deep domain experts; rather, they are adept functional experts who understand how to approach the environment they operate in and what success in it implies.

So where does machine learning operationalization fit into all this? In today’s enterprises, the decision making environment is data-centric and information-centric. For the typical decision maker or manager, this often means information overload and high cognitive load. When your decisions have a dollar-value impact on the enterprise, that load matters. Some cognitive load is necessary for any activity, but beyond a certain point the tedium of decision making under uncertainty can affect decision makers emotionally, triggering the fight-or-flight response or other set behaviours and conditioned responses.

This brings us to the information-rich nature of today’s decision making environment. When, as decision makers, we deal with lower-level metrics of a system’s performance and are expected to design an intervention or take a decision based on them, we can rarely reason well about more than a few such variables at once. Even the best decision makers think in terms of simple patterns most of the time, and rarely engage with complex patterns across many metrics.

Machine learning enables us to model systems by transforming the low-level metrics of their performance into metrics of higher-level abstractions. In essence, this simplifies complex behaviour that would otherwise demand a high cognitive load to process. Crucially, machine learning models change the language in which we make decisions. For instance, when we look at a stock price ticker and reason about it in terms of confidence interval estimates, we’re doing something more sophisticated than asking “Will the stock go up tomorrow?”, and are probably working with a bounded forecast spanning several time periods. When we analyze video feeds manually to look for specific individuals in a perimeter security setting, we ask “Have I seen this person elsewhere?”, but when we do this with machine learning, we ask whether similar or identical people are being identified at different time stamps in the feed. We can consequently reason about the decision we ought to make in terms of higher-level metrics of the underlying system, and in terms of more sophisticated patterns. This significantly reduces cognitive load, allowing us to do more complex work in less time and, crucially, with less specialized skill or intelligence for executing the task, while still holding a sophisticated understanding of the decisions we take.
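To make the stock-ticker example a little more concrete, here is a minimal Python sketch of what “changing the language” can look like. It assumes a deliberately naive random-walk-with-drift model, which is my illustrative choice and not a method from any particular product: a raw price history (the low-level observations) is collapsed into two higher-level quantities, drift and spread, and then expressed as a bounded forecast. The function name `forecast_interval`, the sample prices and the z = 1.96 multiplier (a rough 95% band under a normality assumption) are all hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def forecast_interval(
    prices: Sequence[float], horizon: int = 1, z: float = 1.96
) -> Tuple[float, float, float]:
    """Return (lower, point, upper) for the price `horizon` steps ahead.

    Hypothetical helper. Assumes a random walk with drift: step-to-step
    price changes are treated as independent, identically distributed and
    roughly normal, so z = 1.96 gives an approximate 95% band. This is an
    illustrative simplification, not a trading model.
    """
    if len(prices) < 3:
        raise ValueError("need at least three prices to estimate drift and spread")
    if horizon < 1:
        raise ValueError("horizon must be a positive number of steps")

    # Low-level inputs: the individual price changes between observations.
    changes = [b - a for a, b in zip(prices, prices[1:])]

    # Higher-level summaries: average drift per step and its sample spread.
    drift = statistics.mean(changes)
    sigma = statistics.stdev(changes)

    # Under the i.i.d. assumption, the expected price shifts linearly with
    # the horizon while the spread grows with the square root of the horizon.
    point = prices[-1] + drift * horizon
    half_width = z * sigma * math.sqrt(horizon)
    return point - half_width, point, point + half_width


if __name__ == "__main__":
    history = [100.0, 101.0, 102.0, 101.0, 103.0]  # hypothetical prices
    for h in (1, 3):
        lo, mid, hi = forecast_interval(history, horizon=h)
        print(f"{h} step(s) ahead: {mid:.2f} (roughly {lo:.2f} to {hi:.2f})")
```

The point of the sketch is the shift in the question being asked: instead of “Will the stock go up tomorrow?”, the decision maker reasons about one interval whose width grows with the horizon. A real forecasting model would also have to deal with fat tails, changing volatility and estimation error, all of which this sketch ignores.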

The Limits of Our Decision Making Language

I want to argue here, in a rather Wittgensteinian vein, that humans are great at picking up the fundamentals of complex systems intuitively, as long as the language they use to represent ideas about these systems is simple. Take a ubiquitous and well-known complex system: the water cycle. Most kids can explain it reasonably accurately (even without deep knowledge of the underlying processes), because they intuitively understand the phase changes of water and the roles that components such as trees, clouds and water bodies play in the cycle. They don’t need to understand, say, the anomalous expansion of water or the concept of latent heat in order to grasp how evaporation leads to the formation of clouds, and how clouds in turn produce the rain that feeds water bodies.

Machine learning can provide this kind of simple language for the complex systems that decision makers deal with, which is why OODA and other decision making cycles can be amplified by operationalized machine learning systems. ML systems can model complex system behaviour in terms of higher-level abstractions, and in doing so reduce the cognitive load on the people making decisions. Operationalizing machine learning through MLOps can therefore substantially improve the effectiveness of tactical decision making in data-driven organizations.

Implications and Concluding Remarks

Machine learning and the development of sophisticated reasoning about systems could lead to the resurgence of the generalist, and of the AI-enabled decision making savant with broad capabilities and deep impact. For centuries, capitalism and free markets have evolved and added value through ever greater specialization. This has had both advantages and disadvantages: while it allowed developing societies and countries to rise out of poverty through the development of specialized skill, it also meant decelerating returns for innovative products and, more seriously, led to exploitative business practices that sustained free markets. If applied well, machine learning systems could have far-reaching positive effects, freeing large numbers of specialists from tedium and enabling them to tackle broader and more sophisticated problems while also improving their productivity. As a consequence, ML and similar smart decision enablers may help bring even more people from developing nations in Asia, Africa and elsewhere into the industrial and information age.
